Getting your website indexed by Google is of paramount importance for SEO. Indexing is what makes your pages visible in search results, where potential customers can see them. Google’s web crawlers follow links from page to page and bring the data they find back to Google’s servers, where it is indexed. If the search engine isn’t indexing your website, you are, to all intents and purposes, invisible on the net and losing organic traffic. So let’s find out why your website isn’t getting indexed.

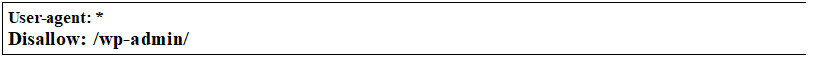

Blocked by robots.txt

Robots.txt is the first thing a web crawler looks for when visiting a website. The file tells robots which pages you would like them to visit and which to stay away from. If your developer or editor has blocked your website in robots.txt, web crawlers will not crawl it and your pages will not be indexed. If the file is written incorrectly, it can end up telling search engines not to crawl your website at all, which means your web pages won’t appear in the search results. Always make sure the file uses the correct format.
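To see how a robots.txt file is read, here is a minimal Python sketch using the standard library’s urllib.robotparser. The two robots.txt files and the example.com URLs are hypothetical. The first file blocks only a /private/ folder. The second is the kind of accidental ‘Disallow: /’ rule that shuts crawlers out of the whole site. Python’s parser follows the original robots exclusion rules and does not implement every Google extension, such as wildcards in paths. Treat it as a quick check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks every crawler from /private/ only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

# Hypothetical mistake: "Disallow: /" blocks crawlers from the entire site.
BROKEN_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() accepts the file's lines
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/", "https://example.com/private/page"):
        print(url, is_allowed(ROBOTS_TXT, url), is_allowed(BROKEN_ROBOTS_TXT, url))
```

With these two files, the first file allows the home page and the second blocks it. Both files block /private/page.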

Noindex in meta tags

It is common practice for webmasters to use a meta ‘robots’ tag with the value ‘noindex’ to stop search engines from indexing a particular web page. So if a particular web page isn’t being indexed by a search engine, check the page’s head section (for example, your theme header) for this tag.


Once this tag is removed from the source code, search engines can index the page the next time they crawl it.
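A quick way to check a page for this tag is to parse its HTML. The sketch below uses Python’s built-in html.parser to collect the directives of any meta tag named ‘robots’ or ‘googlebot’, then reports whether ‘noindex’ is among them. The class and function names are hypothetical. The sketch also does not check the X-Robots-Tag HTTP header, which can carry a noindex directive too.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Hypothetical helper: collects directives from robots/googlebot meta tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives.extend(d.strip().lower() for d in content.split(","))


def has_noindex(html: str) -> bool:
    """Return True if any robots/googlebot meta tag contains 'noindex'."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(has_noindex(page))
```

Self-closing tags such as `<meta ... />` are handled too, because HTMLParser passes them to handle_starttag by default.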

Sitemap Errors

Sitemaps are a path for search engines: they provide a list of the URLs on your website to be crawled. If your sitemap isn’t updated automatically, search engines may take longer to discover your new pages. Check the Sitemaps report in Google Search Console for related errors, as this will help you find and rectify sitemap issues.
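If your platform doesn’t regenerate the sitemap for you, one option is to build it from your list of pages. This Python sketch assumes a hypothetical dictionary that maps each URL to its last-modified date. It writes that dictionary out in the standard sitemap format using xml.etree.ElementTree: a urlset element in the sitemaps.org namespace, with one url entry holding loc and lastmod for each page.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Return sitemap XML listing each URL with its last-modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # text is XML-escaped on output
        ET.SubElement(entry, "lastmod").text = modified.isoformat()  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages and dates for illustration.
    print(build_sitemap({
        "https://example.com/": date(2024, 1, 15),
        "https://example.com/about": date(2024, 2, 1),
    }))
```

You would save the output as, say, sitemap.xml at the site root. You can then point search engines to it with a ‘Sitemap:’ line in robots.txt or by submitting it in Search Console.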

AJAX Applications

If your website relies on AJAX URLs, bots may not be able to crawl the content of these pages, which in turn can prevent your website from being indexed. The content of AJAX applications needs a different approach to get indexed, and Google’s developer documentation explains how to fix this issue.
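One common reason AJAX content goes unindexed is routing by URL fragment. Everything after ‘#’ is never sent to the server, and search engines generally treat URLs that differ only in their fragment as the same page. As a result, several AJAX views can collapse into a single indexable URL. The Python sketch below illustrates this with urllib.parse.urldefrag, using hypothetical example.com routes. It also shows a hypothetical mapping to path-based URLs. This is not Google’s documented solution, and those paths only help if your site actually serves each view’s content at its own path.

```python
from urllib.parse import urldefrag

# Hypothetical URLs from a single-page AJAX app that routes views with "#".
AJAX_URLS = [
    "https://example.com/#/products",
    "https://example.com/#/about",
    "https://example.com/#/contact",
]


def crawlable_urls(urls: list[str]) -> list[str]:
    """Return the distinct URLs that remain once fragments are stripped."""
    return sorted({urldefrag(u).url for u in urls})


def to_path_url(url: str) -> str:
    """Hypothetical fix: turn a '#/products' style route into a real path."""
    base, fragment = urldefrag(url)
    if fragment.startswith("/"):
        return base.rstrip("/") + fragment
    return url  # ordinary in-page anchors (e.g. '#section') are left alone


if __name__ == "__main__":
    print(crawlable_urls(AJAX_URLS))  # all three views share one URL
    print([to_path_url(u) for u in AJAX_URLS])
```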

SEO Spamming

There are lots of SEO techniques that go against Google’s guidelines. Google prioritises the quality of a website and takes manual action against sites that use spamming techniques. Using shortcut or ‘black hat’ methods to rank higher could result in your website being permanently de-indexed.